Nvidia Grows Open Source AI with Slurm Acquisition, Nemotron 3 Release

Nvidia is aggressively expanding its open source footprint, signaling a dual strategy: control the infrastructure software that schedules AI workloads, and supply the open models used to build the next generation of AI agents.

In a significant move to secure the backbone of AI workloads, Nvidia announced the acquisition of SchedMD, the company behind the widely used open source workload management system Slurm. Founded in 2010 by the original lead developers of Slurm, SchedMD has been the primary steward of the software used in high-performance computing (HPC) clusters. Nvidia confirmed the deal Monday, stating that SchedMD will continue to maintain Slurm as an open source, vendor-neutral platform. While financial terms remain undisclosed, Nvidia emphasized its decade-long partnership with SchedMD and plans to accelerate Slurm’s integration across diverse systems, calling the technology “critical infrastructure for generative AI.”

This acquisition underscores Nvidia’s understanding that the future of AI relies as much on orchestration software as it does on silicon. By bringing the core developers of Slurm in-house, Nvidia gains direct influence over the stability and evolution of the software that manages complex compute jobs, which increasingly involve training massive AI models and running multi-agent systems. Nvidia’s commitment to keeping Slurm vendor-neutral is a strategic nod to the open source community, intended to keep the tool an industry standard rather than a closed proprietary system.

Simultaneously, Nvidia is democratizing access to high-end AI capabilities with the release of its new Nemotron 3 model family. Unveiled Monday, Nemotron 3 is positioned as the most efficient open model family for building accurate AI agents. The release consists of three distinct tiers tailored for specific use cases: Nemotron 3 Nano, designed for targeted, lightweight tasks; Nemotron 3 Super, optimized for multi-agent applications; and Nemotron 3 Ultra, engineered to tackle the most complicated reasoning tasks.

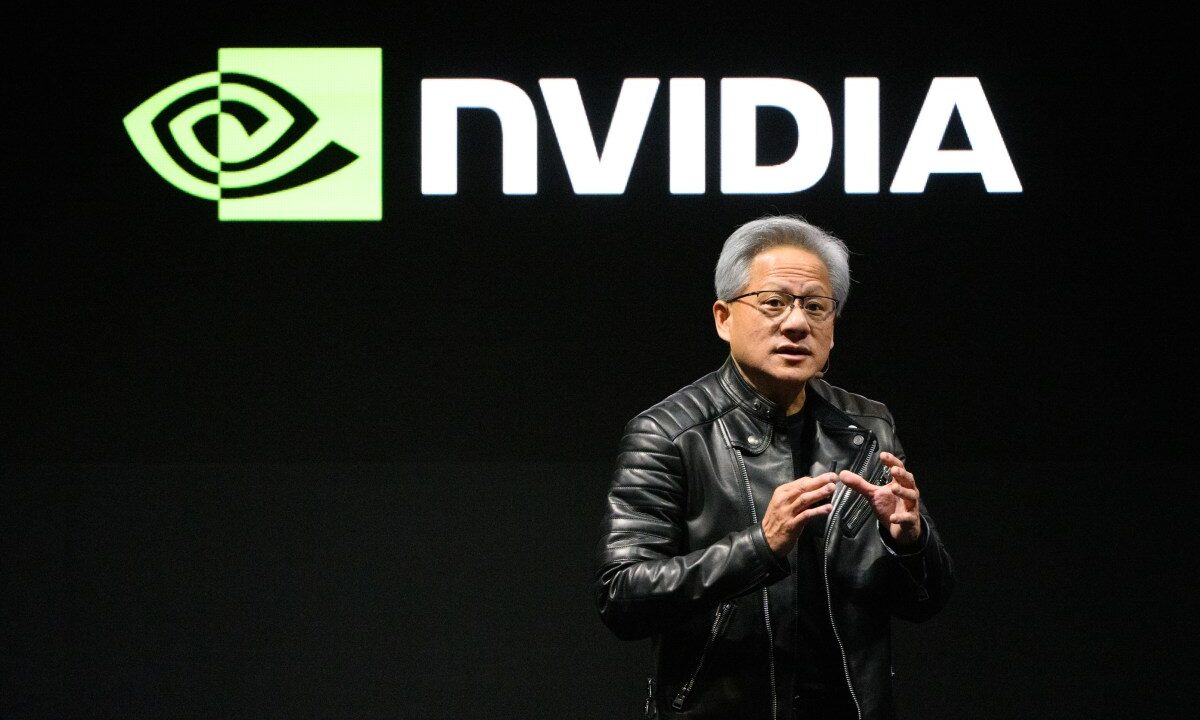

Jensen Huang, Nvidia’s founder and CEO, framed the release as a pivotal moment for developer access. “Open innovation is the foundation of AI progress,” Huang stated. “With Nemotron, we’re transforming advanced AI into an open platform that gives developers the transparency and efficiency they need to build agentic systems at scale.”

The launch of Nemotron 3 is not an isolated event but part of a broader pivot toward open innovation by the semiconductor giant. Just last week, Nvidia introduced Alpamayo-R1, an open reasoning vision language model focused on autonomous driving research. The company has also been expanding the workflows and guides for its open source Cosmos world models, which are released under a permissive license to help developers build physical AI systems.

These moves collectively highlight Nvidia’s strategic bet: physical AI, specifically robotics and autonomous vehicles, is the next major frontier for GPU demand. While training large language models consumes massive compute power today, physically intelligent systems require complex simulation, reasoning, and real-time processing. By offering both the infrastructure management tools (via Slurm) and the open model frameworks (via Nemotron and Cosmos), Nvidia is positioning itself as the indispensable partner for companies building the “brains” behind autonomous machines. This integrated approach aims to lower the barrier to entry for developers while simultaneously locking them into an ecosystem of hardware and software optimized for Nvidia’s architecture.
