Adobe Firefly Unlocks Deterministic Video Editing and New AI Models for Creators

Say goodbye to full re-generations: Firefly’s new timeline editor and third-party AI models put precise video control directly in the prompt.

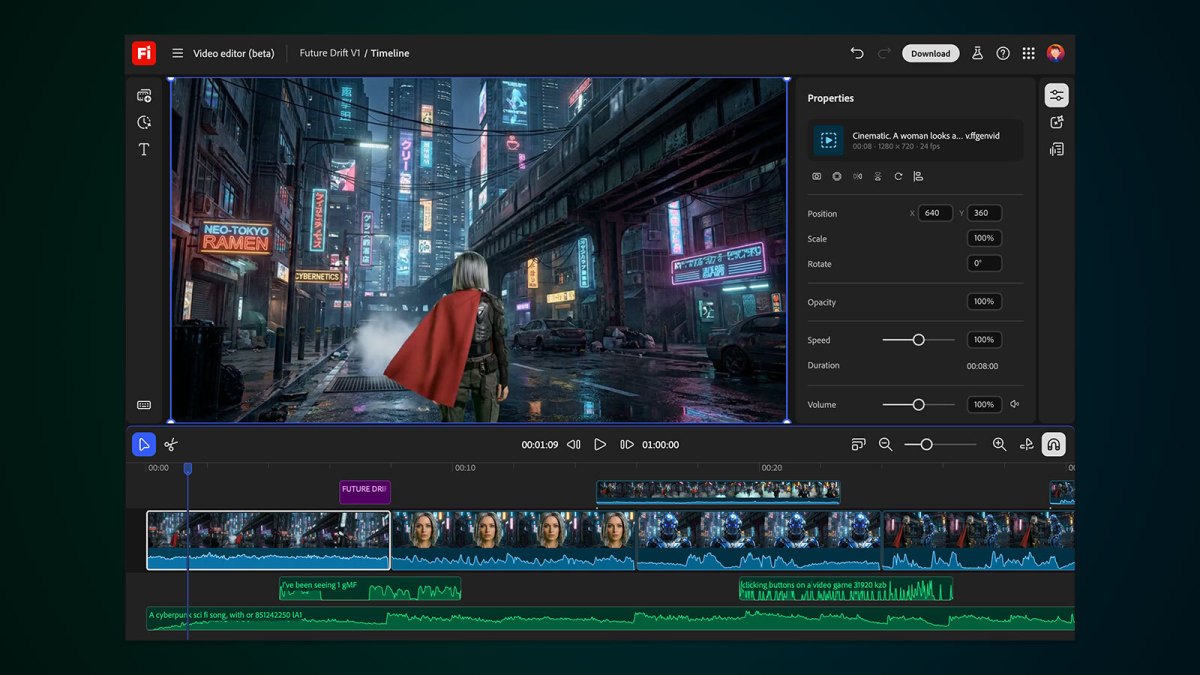

Adobe is rolling out a major update to its Firefly AI video app, moving beyond prompt-only generation to true in-app editing. Instead of regenerating an entire clip when a single element misses the mark, creators can now adjust elements, colors, and camera angles with text prompts, and a new timeline view makes frame-by-frame adjustments straightforward.

The new editor, which has been in private beta since October, uses Runway’s Aleph model for precise, instruction-based changes. It supports prompts like “Change the sky to overcast and lower the contrast” or “Zoom in slightly on the main subject.” Adobe’s own Firefly Video Model adds reference-based camera control: upload a start frame and a reference video of a camera motion, then instruct Firefly to replicate that motion in your edit.

Beyond editing, Adobe is expanding its model lineup within Firefly. Black Forest Labs’ FLUX.2 image generation model is now available across platforms, with full integration in Adobe Express beginning in January. For upscaling, Topaz Labs’ Astra model joins the workflow, letting creators boost resolution to 1080p or 4K directly in the app. A new collaborative boards feature is also included to streamline team-based creative review and iteration.

To accelerate adoption, Adobe is making unlimited generations from all image models and the Adobe Firefly Video Model available until January 15 for subscribers on Firefly Pro, Firefly Premium, 7,000-credit, and 50,000-credit plans. This promotional window is a clear response to the fast-moving wave of generative video tools from competitors, giving paid users a frictionless runway to test new workflows.

This update is the latest step in Firefly’s rapid evolution. Earlier this year, Adobe launched tiered subscriptions for image and video generation, followed by the Firefly web app and mobile apps. The addition of third-party models like FLUX.2 and Astra signals a more open approach, letting creators mix best-in-class tools while keeping the workflow inside Adobe’s ecosystem.

For creators and marketers, the implications are practical:

– Iteration without the reset: Prompt-based edits reduce the time between idea and final cut, especially for color grading, background swaps, and camera re-framing.

– Consistent camera language: Reference-driven motion helps match sequences and maintain shot continuity across edits.

– Higher polish at the end: Built-in upscaling to 1080p or 4K delivers final-quality output without leaving the app.

– Team agility: Collaborative boards streamline approvals and feedback loops, especially for campaigns with tight deadlines.

The new editor’s timeline view is particularly important for mobile-first and social creators. It offers responsive, on-the-go control over frames, sounds, and other clip characteristics, meeting the demand for fast-turnaround content without sacrificing creative precision. For teams, having all these tools in one place reduces handoffs between apps and keeps brand assets centralized.

Adobe’s strategy is clear: combine its own Firefly models with curated third-party options, making Firefly a flexible hub rather than a walled garden. The limited-time unlimited access for paid plans through mid-January is a strong incentive to test the editor’s capabilities, especially for teams planning Q1 campaigns. As the generative video space grows more competitive, workflow depth (editing, reference-driven camera motion, upscaling, and collaboration) will matter as much as raw generation quality. Firefly’s latest update leans into that reality, giving creators more deterministic control over their output and saving time on common post-production tasks.