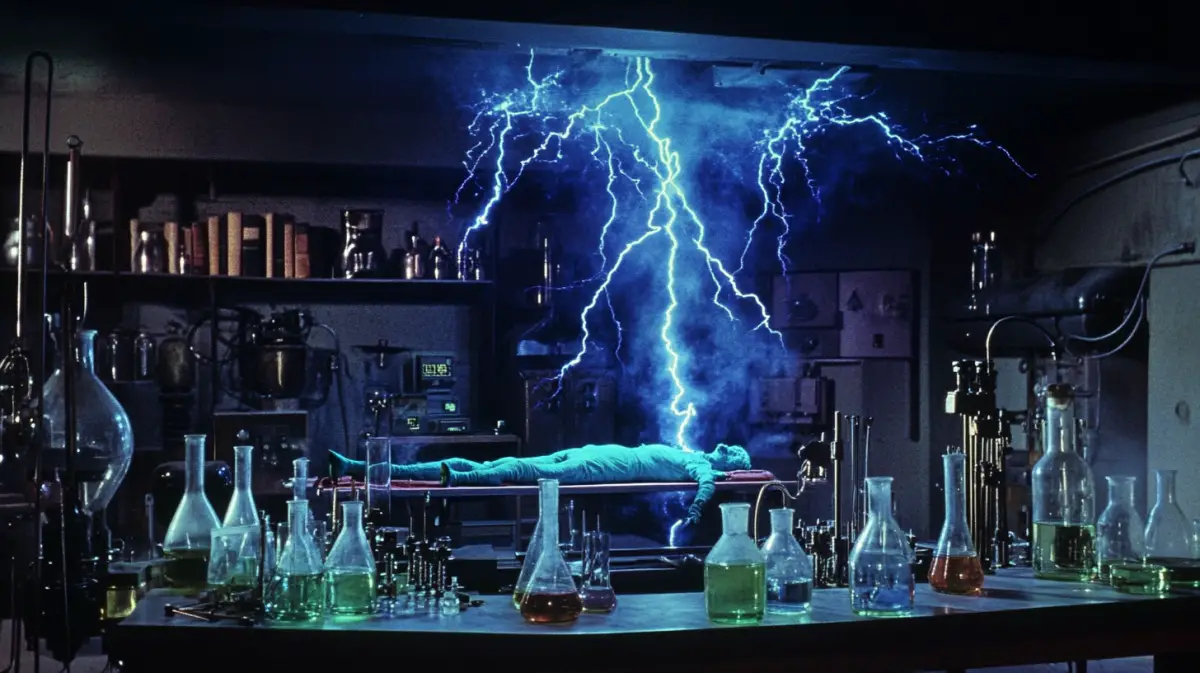

As we navigate the complexities of artificial intelligence, a fundamental question arises: can machines truly think and experience the world as humans do? The concept of a thinking machine remains a psychological construct, and AI applications are unable to shape human existence in genuinely novel ways on their own. Despite their utility, AI systems operate within programmed parameters and lack consciousness, autonomous thought, and genuine engagement with the world. The gap between human consciousness and artificial systems is ontological, rooted in the nature of embodied experience and authentic engagement.

Philosopher Alva Noë argues that computers “don’t actually do anything” because they are not autonomous and do not engage with the world as self-sufficient beings. AI models are neither morally responsible nor actively engaged with the world; they work within predetermined frameworks to deliver specific outputs. Noë emphasizes that we are the authors of the story of technology: it did not write itself. Large language models are tools built through human expertise, but they lack the capacity for self-reflection, meaningful relationships, and authentic experience.

The embodiment argument holds that large language models cannot replicate human intelligence because they lack a physical body. Andreas Matthias counters that LLMs can reason about bodily functions, emotions, and fears without having a body, because they are trained on human language that records human experience. Matthias argues that LLMs exhibit a form of agency, even if not human autonomy, and can do things that pencils, shoes, and calculators cannot, such as explaining complex concepts, understanding questions, and creating art.

The fear of thinking machines is nonetheless grounded, not because AI systems can replicate human minds, but because they can still pose a threat to human livelihoods and societies. AI can create artworks, translate languages, and write emails, threatening to make human workers redundant. The danger of AI lies not in its autonomy but in its potential to be used as a tool for control and oppression. As AI technologies evolve, we must ensure that they remain a tool for human empowerment rather than a weapon of control.

Ultimately, the myth of thinking machines highlights the need for a nuanced understanding of AI’s capabilities and limitations. While AI systems can process language and simulate thought, they lack the depth and complexity of human experience. As we continue to develop and integrate AI into our lives, we must prioritize human values and agency, ensuring that these technologies serve to augment, rather than replace, human connection and creativity. By acknowledging the boundaries between human and artificial intelligence, we can harness the potential of AI to enhance our world, while preserving the essence of what makes us human.

https://daily-philosophy.com/zurkic-matthias-thinking-machines/
